Google Professional Cloud Network Engineer

You have the following private Google Kubernetes Engine (GKE) cluster deployment:

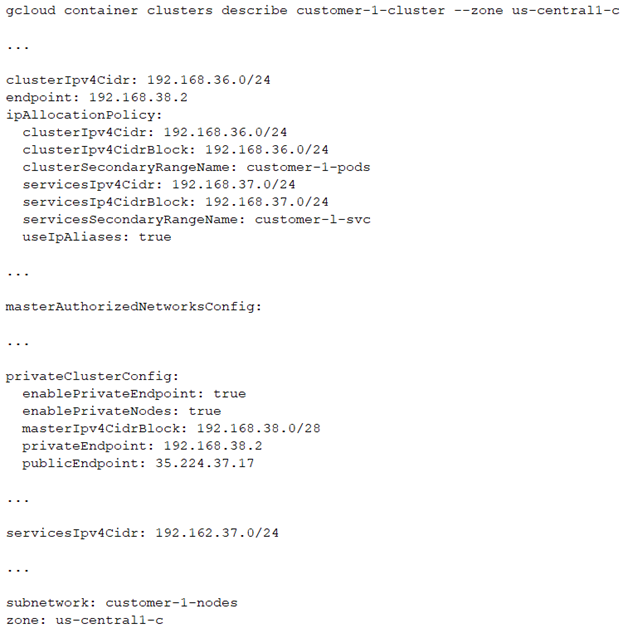

    gcloud container clusters describe customer-1-cluster --zone us-central1-c

    clusterIpv4Cidr: 192.168.36.0/24
    endpoint: 192.168.38.2
    ipAllocationPolicy:
      clusterIpv4Cidr: 192.168.36.0/24
      clusterIpv4CidrBlock: 192.168.36.0/24
      clusterSecondaryRangeName: customer-1-pods
      servicesIpv4Cidr: 192.168.37.0/24
      servicesIpv4CidrBlock: 192.168.37.0/24
      servicesSecondaryRangeName: customer-1-svc
      useIpAliases: true
    masterAuthorizedNetworksConfig:
    privateClusterConfig:
      enablePrivateEndpoint: true
      enablePrivateNodes: true
      masterIpv4CidrBlock: 192.168.38.0/28
      privateEndpoint: 192.168.38.2
      publicEndpoint: 35.224.37.17
    servicesIpv4Cidr: 192.168.37.0/24
    subnetwork: customer-1-nodes
    zone: us-central1-c

You have a virtual machine (VM) deployed in the same VPC, in the subnetwork kubernetes-management, with internal IP address 192.168.40.2/24 and no external IP address assigned. You need to communicate with the cluster master using kubectl. What should you do?

A

Add the network 192.168.40.0/24 to the masterAuthorizedNetworksConfig. Configure kubectl to communicate with the endpoint 192.168.38.2.

B

Add the network 192.168.38.0/28 to the masterAuthorizedNetworksConfig. Configure kubectl to communicate with the endpoint 192.168.38.2.

C

Add the network 192.168.36.0/24 to the masterAuthorizedNetworksConfig. Configure kubectl to communicate with the endpoint 192.168.38.2.

D

Add an external IP address to the VM, and add this IP address in the masterAuthorizedNetworksConfig. Configure kubectl to communicate with the endpoint 35.224.37.17.
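
One way to reason about options A, B, and C: master authorized networks list the source ranges allowed to reach the control plane endpoint, so the useful check is which proposed range actually contains the VM's address. The sketch below does that check with Python's standard `ipaddress` module. The addresses come from the question; the `/24` for kubernetes-management is inferred from the VM's `192.168.40.2/24` address, and the names `VM_ADDRESS`, `CANDIDATE_RANGES`, and `ranges_containing` are illustrative only. Option D is not modelled here, because `enablePrivateEndpoint: true` means kubectl traffic must go to the private endpoint rather than the public one.

```python
import ipaddress

# Internal address of the management VM, as given in the question.
VM_ADDRESS = ipaddress.ip_address("192.168.40.2")

# Ranges proposed for masterAuthorizedNetworksConfig in options A, B, and C.
CANDIDATE_RANGES = {
    "A": ipaddress.ip_network("192.168.40.0/24"),  # assumed kubernetes-management subnet
    "B": ipaddress.ip_network("192.168.38.0/28"),  # masterIpv4CidrBlock
    "C": ipaddress.ip_network("192.168.36.0/24"),  # clusterIpv4Cidr (pod range)
}


def ranges_containing(address, candidates):
    """Return the option letters whose range contains the given address."""
    return [letter for letter, network in candidates.items() if address in network]


matching = ranges_containing(VM_ADDRESS, CANDIDATE_RANGES)
print(matching)  # ['A']
```

Only the range in option A covers the VM's source address; the ranges in B and C describe the control plane and the pods, not the client that runs kubectl.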