Databricks Certified Generative AI Engineer - Associate

Get started today

Ultimate access to all questions.

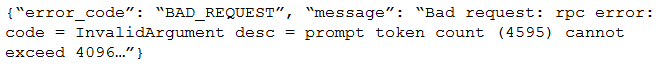

After switching the response generation LLM in a RAG pipeline from GPT-4 to a self-hosted model with a shorter context length, the following error occurs:

//IMG//

Without changing the response generation model, which TWO solutions should be implemented? (Choose two.)

Last updated: December 4, 2025 at 14:03

A. Use a smaller embedding model to generate embeddings (7.8%)

B. Reduce the maximum output tokens of the new model
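
The error image is not reproduced here, so the sketch below assumes it is a "context length exceeded" error. Under that assumption, the constraint is that the prompt (question plus retrieved chunks) and the reserved output tokens must together fit in the model's context window. The function name `fit_chunks_to_budget`, its parameters, and the whitespace-based `count_tokens` are hypothetical stand-ins for illustration; a real pipeline would use the model's own tokenizer.

```python
from typing import List


def count_tokens(text: str) -> int:
    """Rough stand-in for a real tokenizer (assumption): counts whitespace-separated words."""
    return len(text.split())


def fit_chunks_to_budget(
    question: str,
    chunks: List[str],
    context_length: int,
    max_output_tokens: int,
) -> List[str]:
    """Keep retrieved chunks, in ranked order, while the prompt still fits.

    Budget for chunks = context_length - max_output_tokens - question tokens.
    """
    budget = context_length - max_output_tokens - count_tokens(question)
    kept: List[str] = []
    for chunk in chunks:
        cost = count_tokens(chunk)
        if cost > budget:
            break  # the next chunk would overflow the context window
        kept.append(chunk)
        budget -= cost
    return kept


if __name__ == "__main__":
    retrieved = ["a b c", "d e f g", "h i"]
    # Same context window, two different output reservations.
    print(fit_chunks_to_budget("what is x", retrieved, context_length=12, max_output_tokens=4))
    print(fit_chunks_to_budget("what is x", retrieved, context_length=12, max_output_tokens=2))
```

The arithmetic shows which levers affect the budget: the size and number of retrieved chunks, and the tokens reserved for output. The embedding model does not appear in it, because embeddings are used for retrieval and are never placed in the generation prompt.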
