Microsoft Certified Azure Data Scientist Associate - DP-100

Get started today

Ultimate access to all questions.

Comments

Loading comments...

Ultimate access to all questions.

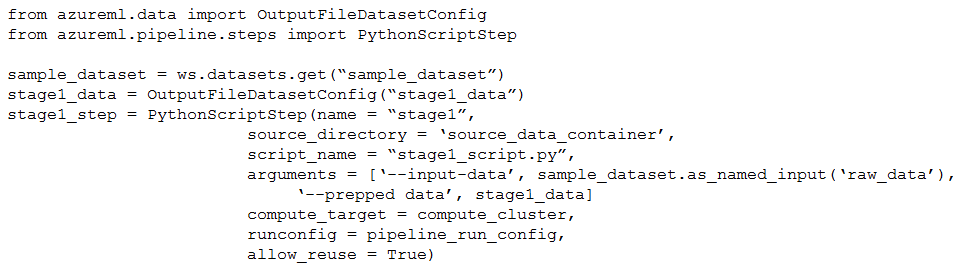

You create an Azure Machine Learning pipeline with two stages. The first stage prepares data from a dataset named sample_dataset. The second stage will use the output from the first stage to train and register a model.

The code for the first stage is provided:

from azureml.core import Dataset, Run

from azureml.data import OutputFileDatasetConfig

run = Run.get_context()

ws = run.experiment.workspace

# get input dataset

dataset = Dataset.get_by_name(ws, 'sample_dataset')

# create configuration for output dataset

output_data = OutputFileDatasetConfig(

destination = (run.get_context_data_reference('workspaceblobstore'), 'output_data/{}/'.format(run.id))

).as_mount()

# ... (data preparation logic would be here)

# The prepared data is written to the output_data path

from azureml.core import Dataset, Run

from azureml.data import OutputFileDatasetConfig

run = Run.get_context()

ws = run.experiment.workspace

# get input dataset

dataset = Dataset.get_by_name(ws, 'sample_dataset')

# create configuration for output dataset

output_data = OutputFileDatasetConfig(

destination = (run.get_context_data_reference('workspaceblobstore'), 'output_data/{}/'.format(run.id))

).as_mount()

# ... (data preparation logic would be here)

# The prepared data is written to the output_data path

You need to identify the storage location containing the output of the first stage that should be used as input for the second stage.

Which storage location should you use?

A

workspaceblobstore datastore

B

workspacefilestore datastore

C

compute instance

D

compute_cluster